Exploring a variety of approaches: stain normalization, color augmentation, adversarial domain adaptation, model adaptation, and finetuning

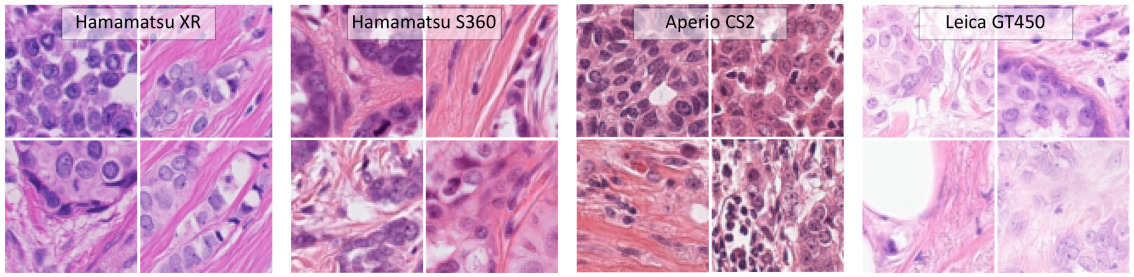

One of the largest challenges in histopathology image analysis is creating models that are robust to the variations across different labs and imaging systems. These variations can be caused by different color responses of slide scanners, raw materials, manufacturing techniques, and protocols for staining.

Different setups can produce images with different stain intensities or other changes, creating a domain shift between the source data that the model was trained on and the target data on which a deployed solution would need to operate. When the domain shift is too large, a model trained on one type of data will fail on another, often in unpredictable ways.

I’ve been working with a client who is interested in selecting the best object detection model for their use case. But the images their model will examine once deployed come from a different lab and a variety of scanners.

The domain shift from their training dataset to the target one is likely to be a larger challenge than getting state-of-the-art results on the training set.

My advice to them was to tackle this domain adaptation challenge early. They can always experiment with better object detection models later, once they’ve learned how to handle the domain shift.

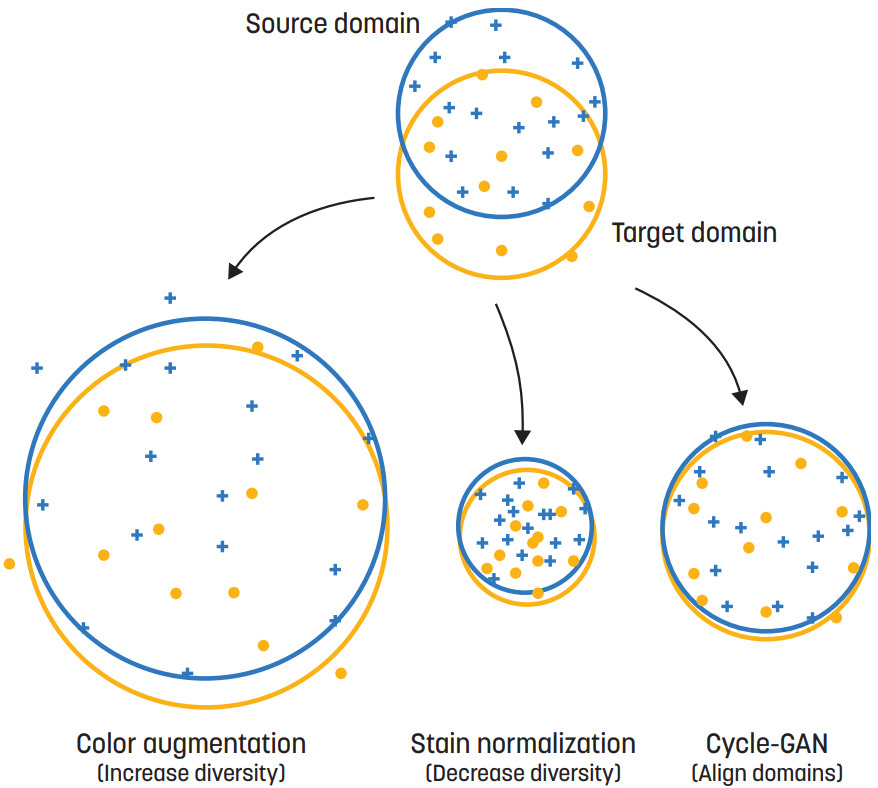

So how do you handle the domain shift? There are a few different options:

- Standardize the appearance of your images with stain normalization techniques

- Color augmentation during training to take advantage of variations in staining

- Domain adversarial training to learn domain-invariant features

- Adapt the model at test time to handle the new image distribution

- Finetune the model on the target domain

Some of these approaches have opposing goals. For example, color augmentation increases the diversity of images, while stain normalization tries to reduce the variations. Domain adversarial training tries to learn domain-invariant features, while adapting or finetuning the model tailors it to the target domain only.

This article will review each of the five strategies, followed by a summary of studies revealing which method(s) work best.

1. Stain Normalization

Different labs and scanners can produce images with different color profiles for a particular stain. The goal of stain normalization is to standardize the appearance of these stains.

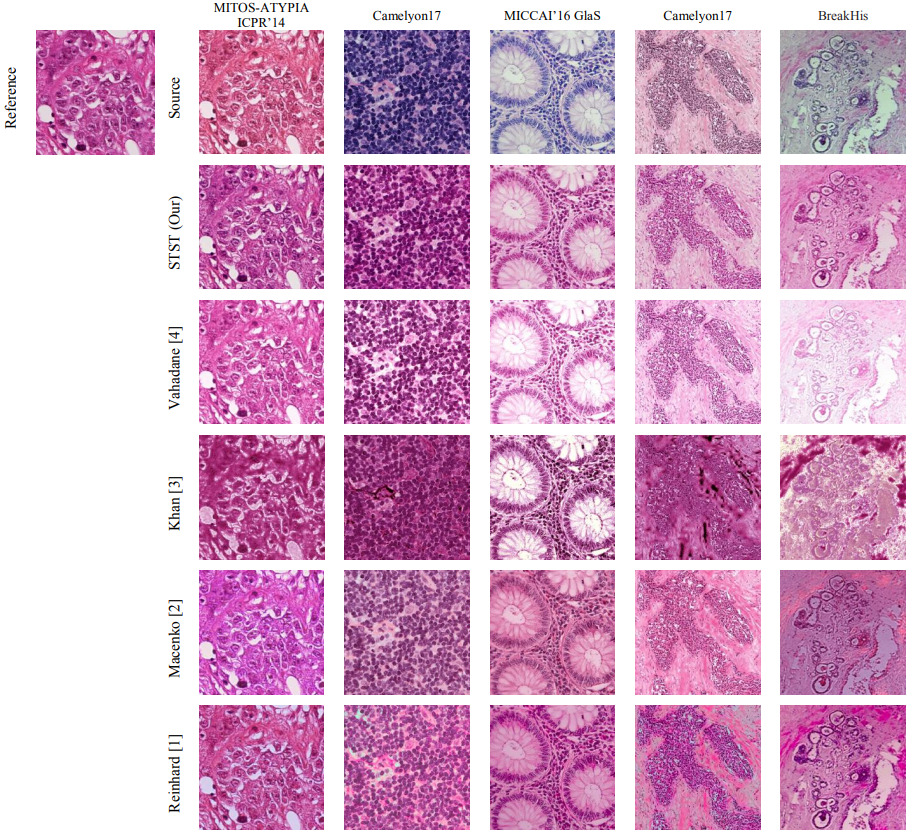

Traditionally, methods like color matching [Reinhard2001] and stain separation [Macenko2009, Khan2014, Vahadane2016] were used. However, these methods rely on the selection of a single reference slide. Ren et al. have since shown that using an ensemble built with different reference slides is one way to reduce this dependence [Ren2019].
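The numpy sketch below shows Reinhard-style color matching. Both images are converted to the decorrelated lαβ space described by Reinhard et al. Each channel of the source is then shifted and scaled so its mean and standard deviation match the reference, and the result is converted back to RGB. The function names and the `EPS` guard against log(0) are assumptions for illustration. Library implementations typically also mask out background pixels, which this sketch does not.

```python
import numpy as np

# RGB -> LMS cone-response matrix (Reinhard et al., 2001)
RGB2LMS = np.array([[0.3811, 0.5783, 0.0402],
                    [0.1967, 0.7244, 0.0782],
                    [0.0241, 0.1288, 0.8444]])
# log-LMS -> decorrelated l-alpha-beta space
LMS2LAB = np.diag([1 / np.sqrt(3), 1 / np.sqrt(6), 1 / np.sqrt(2)]) @ np.array(
    [[1.0, 1.0, 1.0], [1.0, 1.0, -2.0], [1.0, -1.0, 0.0]])
EPS = 1e-6  # assumed guard against log(0) and division by zero


def rgb_to_lab(img):
    """Map an (H, W, 3) float RGB image in [0, 1] to an (H*W, 3) array of l-alpha-beta pixels."""
    lms = img.reshape(-1, 3) @ RGB2LMS.T
    return np.log10(np.maximum(lms, EPS)) @ LMS2LAB.T


def lab_to_rgb(lab, shape):
    """Invert rgb_to_lab and reshape back to an image, clipping to the valid range."""
    log_lms = lab @ np.linalg.inv(LMS2LAB).T
    rgb = (10.0 ** log_lms) @ np.linalg.inv(RGB2LMS).T
    return np.clip(rgb, 0.0, 1.0).reshape(shape)


def reinhard_normalize(source, reference):
    """Match the per-channel mean and std of `source` to those of `reference`."""
    src, ref = rgb_to_lab(source), rgb_to_lab(reference)
    scaled = (src - src.mean(axis=0)) / (src.std(axis=0) + EPS)
    return lab_to_rgb(scaled * ref.std(axis=0) + ref.mean(axis=0), source.shape)
```

The whole method reduces to six numbers taken from the reference: a mean and standard deviation per channel. This is also why the result depends so strongly on which reference slide is chosen.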

The larger problem is that these techniques do not consider spatial features, which can lead to tissue structure not being preserved.

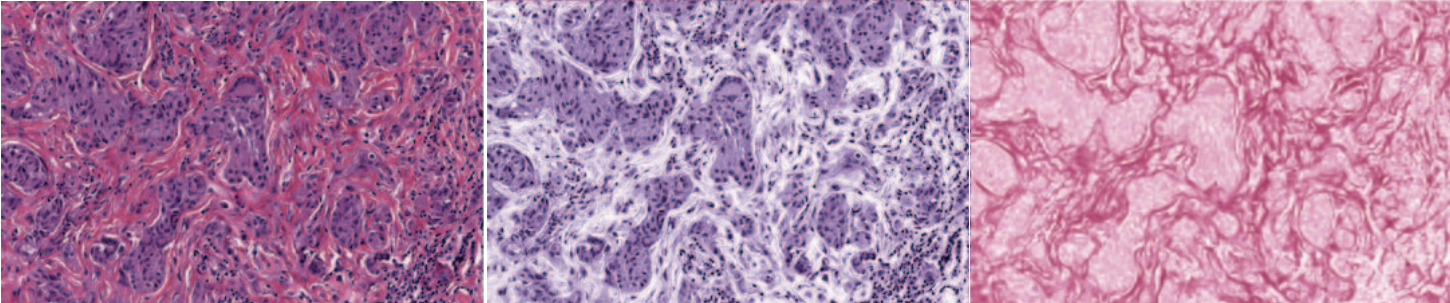

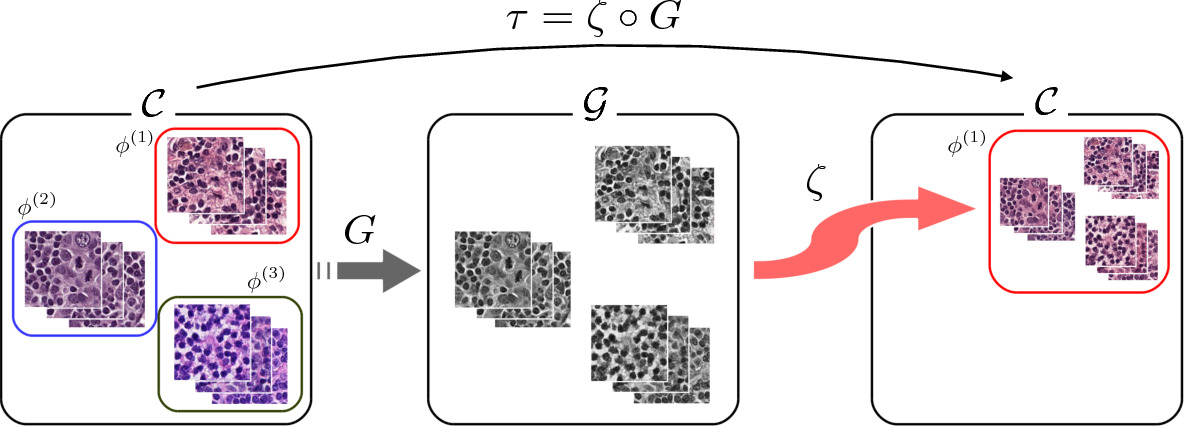

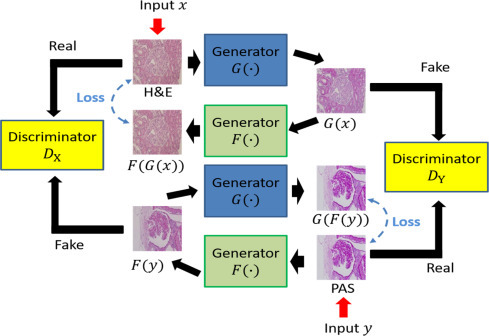

Generative Adversarial Networks (GANs) are the state-of-the-art in stain normalization today. Given an image from domain A, a generator converts it into domain B. A discriminator network tries to distinguish real domain B images from fake ones, helping the generator to improve.

If paired and aligned images from domains A and B are available, this setup performs well. However, it typically requires scanning each slide on two different scanners – or potentially even restaining and rescanning each slide.

But there is a simpler solution to obtaining paired images: convert a color image to grayscale (domain A) and pair it with the original color image (domain B) [Salehi2020]. The two are perfectly aligned and a Conditional GAN can be trained to reconstruct the color image.

One major advantage of this approach is that a restaining model trained for one particular domain may work for a variety of different labs and scanners as there is less variation in the input grayscale images than in color.
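Building the paired training data for this setup takes only a few lines. The sketch below converts an RGB tile to a single luminance channel. It then returns the grayscale tile alongside the original color tile as the (input, target) pair for a pix2pix-style conditional GAN. The BT.601 luminance weights and the function name are assumptions for illustration. The grayscale conversion used by Salehi et al. may differ.

```python
import numpy as np

# ITU-R BT.601 luma weights (an assumed choice of grayscale conversion)
LUMA = np.array([0.299, 0.587, 0.114])


def make_restaining_pair(rgb_tile):
    """Return (grayscale input, color target) for a conditional GAN.

    rgb_tile: float array (H, W, 3) in [0, 1]. The two outputs are pixel-aligned
    by construction, so no registration between scans is needed.
    """
    gray = rgb_tile @ LUMA  # (H, W) luminance
    return gray[..., np.newaxis], rgb_tile
```

At inference, a tile from any lab or scanner goes through the same grayscale conversion. The trained generator then recolors it in the stain style of the training domain.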

An alternative approach when paired images are not available is a CycleGAN [Zhu2017]. In this setup there are two generators: one to convert from domain A to B and another to go from domain B to A. The goal of these two models is to be able to reconstruct an original image: A -> B -> A or B -> A -> B. CycleGANs also make use of discriminators to predict real versus generated images for each domain.
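The cycle-consistency term is what lets a CycleGAN learn without paired images. Below is a framework-agnostic sketch of that term alone, with the adversarial losses omitted. It computes an L1 penalty between each image and its round-trip reconstruction, weighted by λ. The generator arguments are placeholders for whatever networks you train. `lam=10.0` is an assumed default that matches the weighting commonly used in CycleGAN implementations.

```python
import numpy as np


def cycle_consistency_loss(g_ab, g_ba, real_a, real_b, lam=10.0):
    """L1 cycle loss: A -> B -> A and B -> A -> B should reproduce the inputs.

    g_ab, g_ba: callables mapping image batches between domains (placeholders
    for the two generator networks). lam: weight of the cycle term.
    """
    loss_a = np.mean(np.abs(g_ba(g_ab(real_a)) - real_a))
    loss_b = np.mean(np.abs(g_ab(g_ba(real_b)) - real_b))
    return lam * (loss_a + loss_b)
```

Toy generators that are exact inverses, such as `lambda x: 2 * x` and `lambda x: x / 2`, give a loss of zero. A generator pair that alters tissue structure on the round trip is penalized, even if each output individually fools its discriminator.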

Stain normalization methods using deep learning have become increasingly complex. As a first pass to see if this type of standardization is helpful for your task, I suggest starting simple. StainTools and HistomicsTK both implement some of the color matching and stain separation methods.
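To show what a stain separation method does, here is a simplified numpy sketch of Macenko-style stain vector estimation [Macenko2009]. It follows four steps:

- convert to optical density;
- drop near-background pixels;
- project the remaining pixels onto the plane of the two largest principal directions;
- take robust extreme angles as the hematoxylin and eosin vectors.

This is not StainTools or HistomicsTK code. The default thresholds (`beta`, `alpha`), the axis-orientation step and the ordering heuristic are common choices that I assume here.

```python
import numpy as np


def macenko_stain_matrix(img, io=255.0, beta=0.15, alpha=1.0):
    """Estimate a (2, 3) H&E stain matrix whose rows are unit optical-density vectors.

    img: RGB array (..., 3) with values in [0, io]. beta: OD threshold below which
    pixels are treated as background. alpha: percentile used for robust extreme angles.
    """
    od = -np.log((img.reshape(-1, 3).astype(float) + 1.0) / io)  # Beer-Lambert optical density
    od = od[np.all(od > beta, axis=1)]                           # keep stained tissue pixels only
    _, eigvecs = np.linalg.eigh(np.cov(od.T))                    # eigenvalues in ascending order
    plane = eigvecs[:, 1:3]                                      # two largest principal directions
    # Orient both axes so the mean OD projects positively; keeps angles from wrapping at +/- pi
    plane = plane * np.where(od.mean(axis=0) @ plane < 0, -1.0, 1.0)
    proj = od @ plane
    phi = np.arctan2(proj[:, 1], proj[:, 0])
    lo, hi = np.percentile(phi, [alpha, 100.0 - alpha])
    v1 = plane @ np.array([np.cos(lo), np.sin(lo)])
    v2 = plane @ np.array([np.cos(hi), np.sin(hi)])
    # Heuristic: hematoxylin absorbs more red light, so its first OD component is larger
    stains = np.array([v1, v2]) if v1[0] > v2[0] else np.array([v2, v1])
    return stains / np.linalg.norm(stains, axis=1, keepdims=True)
```

Given the stain matrix, per-pixel stain concentrations can be recovered by least squares, for example `np.linalg.lstsq(stains.T, od.T, rcond=None)`. The concentrations are then rescaled to match a reference slide and recombined, which is the normalization step itself.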

These simpler methods are sufficient in some cases, but not all. The figure below demonstrates how different methods perform on five different datasets.

2. Color Augmentation

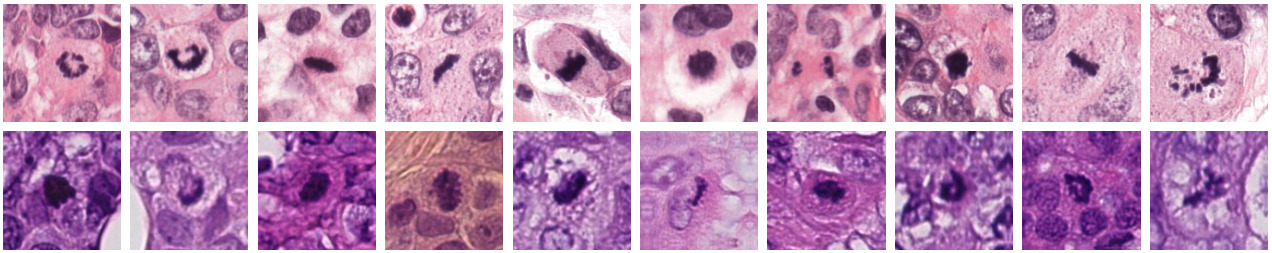

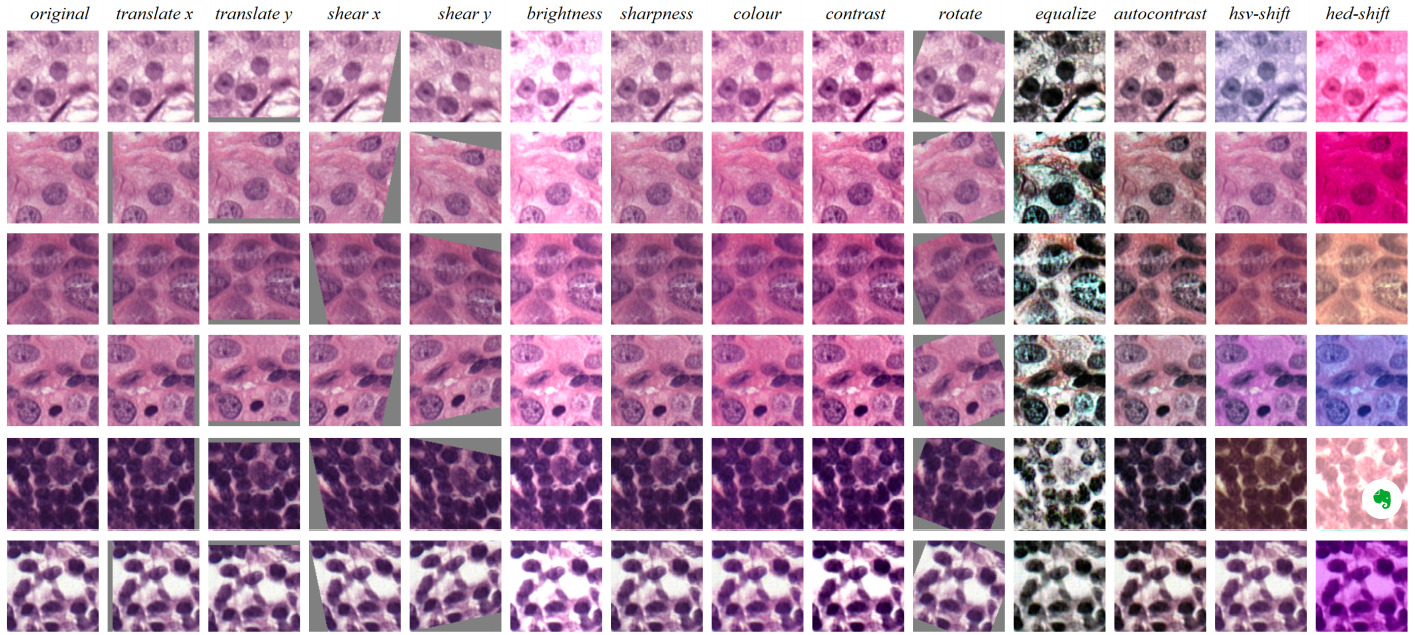

Image augmentation by applying random affine transforms or adding noise is one of the most common regularization techniques for combating overfitting. Similarly, variations in staining can be taken advantage of to increase the diversity of image appearance presented to the model during training.

While drastic changes in color are not realistic for histology, more subtle ones generated through random additive and multiplicative changes to each color channel have been shown to improve model performance.

The intensity of color augmentation is an additional hyperparameter that should be experimented with during training and validated on test sets from different labs or scanners.
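A minimal sketch of the additive and multiplicative per-channel jitter described above follows. A single `strength` knob stands in for the augmentation intensity hyperparameter. The function name, the uniform sampling and the default strength are assumptions chosen for illustration, not values from the cited studies.

```python
import numpy as np


def random_channel_jitter(img, strength=0.05, rng=None):
    """Apply a random scale and shift to each RGB channel.

    img: float array (H, W, 3) in [0, 1]. For each channel c, samples
    scale_c ~ U(1 - strength, 1 + strength) and shift_c ~ U(-strength, strength),
    then clips back to [0, 1]. Larger `strength` means more aggressive augmentation.
    """
    rng = np.random.default_rng() if rng is None else rng
    scale = rng.uniform(1.0 - strength, 1.0 + strength, size=3)
    shift = rng.uniform(-strength, strength, size=3)
    return np.clip(img * scale + shift, 0.0, 1.0)
```

One way to tune `strength` is to sweep a few values, say between 0 and 0.2, and compare performance on held-out data from other labs or scanners.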

Faryna et al. demonstrated the RandAugment technique on histopathology [Faryna2021]. This approach parameterizes augmentation as the number of random transformations selected and their magnitude.
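RandAugment reduces the augmentation search space to two numbers: N, how many transforms to apply per image, and M, a magnitude shared by all of them. Below is a toy sketch of that sampling logic using a small, hypothetical set of numpy transforms. The transform list, the 0 to 10 magnitude scale and all names are assumptions. Faryna et al. use their own H&E-tailored transform list, which is not reproduced here.

```python
import numpy as np


# Hypothetical transform set; each op maps (image, magnitude in [0, 1], rng) -> image
def _brightness(img, m, rng):
    return img + rng.choice([-1, 1]) * 0.2 * m


def _contrast(img, m, rng):
    mean = img.mean()
    return (img - mean) * (1 + rng.choice([-1, 1]) * 0.5 * m) + mean


def _flip(img, m, rng):
    return img[:, ::-1]  # geometric op; ignores the magnitude


def _rot90(img, m, rng):
    return np.rot90(img, k=rng.integers(1, 4))  # geometric op; ignores the magnitude


OPS = [_brightness, _contrast, _flip, _rot90]


def rand_augment(img, n=2, m=5, max_m=10, rng=None):
    """Apply `n` ops sampled uniformly with replacement, all at magnitude m / max_m."""
    rng = np.random.default_rng() if rng is None else rng
    for idx in rng.choice(len(OPS), size=n, replace=True):
        img = OPS[idx](img, m / max_m, rng)
    return np.clip(img, 0.0, 1.0)
```

Because every op shares one magnitude, tuning reduces to a small grid search over `n` and `m` instead of a separate search per transform.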

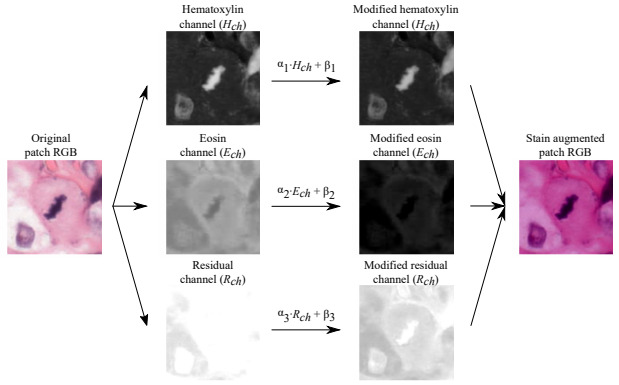

Tellez et al. studied the effect of different augmentation techniques (individually and combined) on mitosis detection and proposed an H&E-specific transform [Tellez2018]. They performed color deconvolution (as is used in the stain separation methods mentioned above), then applied the random shifts in hematoxylin and eosin space before converting back to RGB. The H&E transform was the best performing individual augmentation method. A combination of all augmentation methods was critical in generalizing performance to a new dataset.
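Here is a simplified numpy sketch of that H&E-space augmentation. A color deconvolution splits the image into hematoxylin, eosin and a residual channel. Each channel gets a random scale and shift, and the channels are recomposed to RGB. The matrix holds rounded H, E and DAB optical-density vectors commonly attributed to Ruifrok and Johnston. The `sigma` default, the `EPS` floor and the function name are assumptions, and Tellez et al.'s exact implementation may differ.

```python
import numpy as np

# Rows: optical-density vectors for hematoxylin, eosin, and DAB (used here as a residual channel)
RGB_FROM_HED = np.array([[0.65, 0.70, 0.29],
                         [0.07, 0.99, 0.11],
                         [0.27, 0.57, 0.78]])
HED_FROM_RGB = np.linalg.inv(RGB_FROM_HED)
EPS = 1e-6  # assumed floor to avoid log(0) on saturated pixels


def hed_augment(img, sigma=0.05, rng=None):
    """Randomly perturb stain concentrations in HED space.

    img: float RGB array (H, W, 3) in (0, 1]. Each stain channel i is transformed as
    c_i * alpha_i + beta_i with alpha_i ~ U(1 - sigma, 1 + sigma), beta_i ~ U(-sigma, sigma).
    """
    rng = np.random.default_rng() if rng is None else rng
    od = -np.log(np.maximum(img, EPS))   # RGB -> optical density
    conc = od @ HED_FROM_RGB              # color deconvolution into stain concentrations
    alpha = rng.uniform(1 - sigma, 1 + sigma, size=3)
    beta = rng.uniform(-sigma, sigma, size=3)
    conc = conc * alpha + beta
    return np.clip(np.exp(-(conc @ RGB_FROM_HED)), 0.0, 1.0)  # recompose and return to RGB
```

Perturbing stain amounts rather than raw RGB values keeps the augmented colors closer to what a real lab could produce.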

3. Unsupervised Domain Adversarial Training

The next technique for domain adaptation is domain adversarial training [Ganin2016]. This approach makes use of unlabeled images from the target domain.

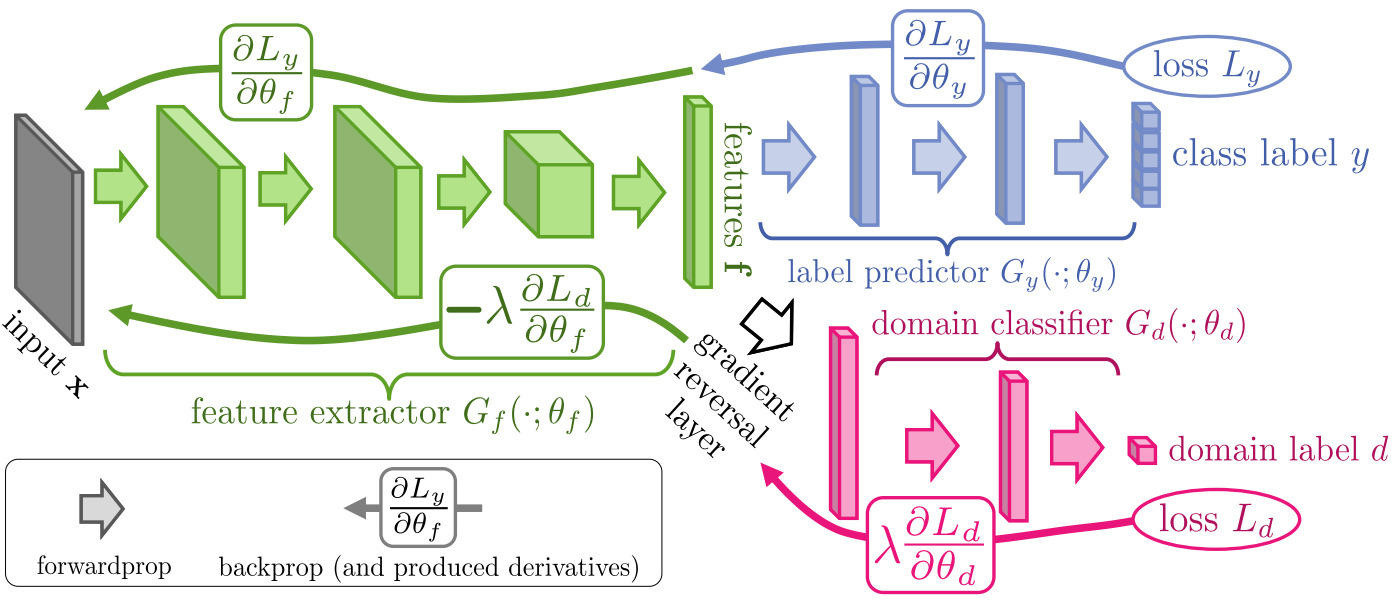

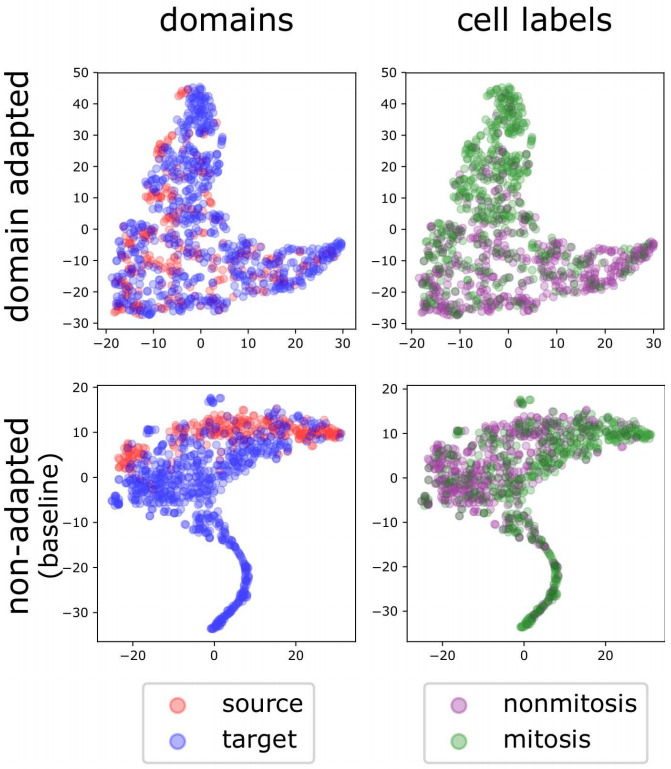

A domain adversarial module is added to an existing model. The goal of this classifier is to predict whether an image belongs to the source or the target domain. A gradient reversal layer connects this module to the existing network so that training optimizes the original task and encourages the network to learn domain-invariant features.

During training, labeled images from the source domain and unlabeled ones from the target domain are used. For labeled source images, both the loss of the original network and the domain loss are applied. For unlabeled target images, only the domain loss is used.
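Below is a toy numpy sketch of one DANN update that makes the gradient flow concrete. It uses a linear feature extractor with logistic task and domain heads. The model and all names are hypothetical; a real implementation would use an autodiff framework and a deep network. The two heads are updated normally. The reversal layer flips the sign of the domain gradient before it reaches the shared features, so the feature extractor is pushed to lower the task loss while raising the domain classifier's loss. Source images contribute to both losses and target images only to the domain loss.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def grad_reverse_backward(grad, lambd):
    """Gradient reversal layer: identity in the forward pass, multiplies the
    incoming gradient by -lambd in the backward pass."""
    return -lambd * grad


def dann_step(params, xs, ys, xt, lr=0.1, lambd=1.0):
    """One DANN update for a toy linear model with binary task and domain heads.

    xs, ys: labeled source data and labels (0/1); xt: unlabeled target data.
    params: dict with feature weights W (d, k), task head a (k,), domain head b (k,).
    """
    W, a, b = params["W"], params["a"], params["b"]
    ns = len(xs)
    x = np.vstack([xs, xt])
    d = np.concatenate([np.zeros(ns), np.ones(len(xt))])  # domain labels: 0 = source, 1 = target
    f = x @ W  # shared features (forward pass through the reversal layer is the identity)

    # Task loss (mean binary cross-entropy) on labeled source images only
    p_task = sigmoid(f[:ns] @ a)
    err_task = (p_task - ys) / ns  # d(loss)/d(logit)
    grad_a = f[:ns].T @ err_task
    grad_f = np.zeros_like(f)
    grad_f[:ns] += np.outer(err_task, a)

    # Domain loss (mean binary cross-entropy) on source and target images
    p_dom = sigmoid(f @ b)
    err_dom = (p_dom - d) / len(x)
    grad_b = f.T @ err_dom
    # The domain gradient is reversed before it reaches the shared features
    grad_f += grad_reverse_backward(np.outer(err_dom, b), lambd)

    grad_W = x.T @ grad_f
    return {"W": W - lr * grad_W, "a": a - lr * grad_a, "b": b - lr * grad_b}
```

With `lambd=0` this reduces to ordinary source-only training plus an independent domain classifier. Increasing `lambd` trades task fit for domain invariance.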

This module can be added to a variety of deep learning models. For classification, it is typically connected to a layer near the output. For segmentation, it is usually applied to the bottleneck layer, although it can also be applied to multiple layers. For detection, it can be applied to the feature pyramid network. For challenging detection tasks such as mitosis detection, where the detector is followed by an additional classifier network, the domain adversarial module may be applied only to the second stage [Aubreville2020a].

4. Adapt Model at Test Time

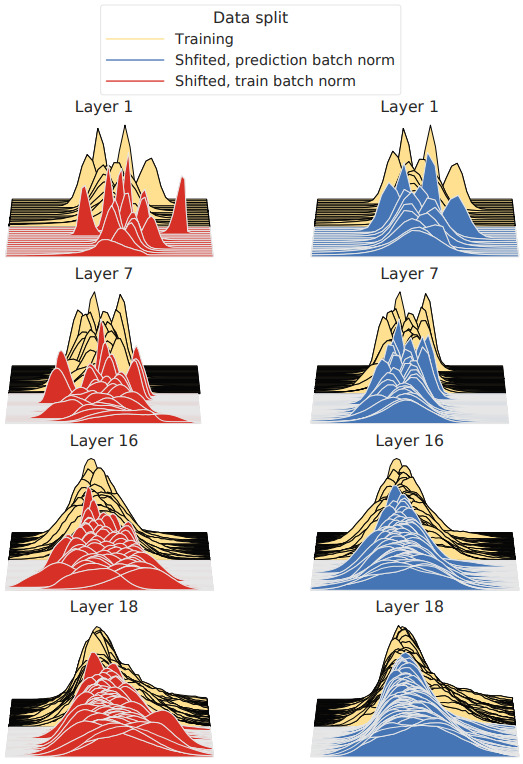

Instead of accommodating domain shifts during training, the model may be modified at test time. Domain shifts are reflected in a change in distribution in feature space – a covariate shift. So for models using batch normalization layers, the mean and standard deviation may be recalculated for a new test set.

These new statistics can be calculated over the whole test set and updated in the model before running inference. Or they can be calculated for each new batch of data. Nado et al. found that the latter approach, called prediction-time batch normalization, was sufficient [Nado2020]. Further, a single batch of 500 images was sufficient to get a substantial improvement in model accuracy.
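The difference between standard inference and prediction-time batch normalization comes down to which statistics a batch-norm layer normalizes with. Below is a minimal numpy sketch for a single layer over (batch, channels) activations; the function names and the dict layout of `layer` are hypothetical.

```python
import numpy as np


def batch_norm(x, gamma, beta, mean, var, eps=1e-5):
    """Normalize activations x of shape (batch, channels) with the given statistics."""
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def standard_inference(x, layer):
    # Uses running statistics accumulated on the source (training) domain
    return batch_norm(x, layer["gamma"], layer["beta"], layer["running_mean"], layer["running_var"])


def prediction_time_bn(x, layer):
    # Recomputes the statistics from the current test batch, which absorbs a covariate shift
    return batch_norm(x, layer["gamma"], layer["beta"], x.mean(axis=0), x.var(axis=0))
```

Suppose target-domain activations are shifted by a constant per channel, a crude stand-in for a stain or scanner change. Standard inference passes the shift on to later layers, while prediction-time normalization removes it. Using statistics from the whole test set instead of each batch only changes what `x.mean` and `x.var` are computed over.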

5. Model Finetuning

Finally, a model may be finetuned on labeled data from the target domain [Aubreville2020b]. If enough labeled target examples are available, this approach is likely to produce the best result. However, it is the most time-consuming and least generalizable option: the model may need to be finetuned again for each new target domain in the future.

Comparison of Approaches

Color augmentation and stain normalization are used extensively in pathology image applications, especially for whole slide H&E images. Domain adversarial training and model adaptation at test time are less studied thus far.

Stain Normalization vs. Color Augmentation

Khan et al. studied stain normalization and color augmentation when applied individually and together [Khan2020]. The best results were obtained when the methods were used together.

Tellez et al. tested different combinations of image augmentation and normalization strategies for a variety of histology classification tasks [Tellez2019]. The best configuration turned out to be randomly shifting the color channels and applying no stain normalization. Experiments using stain normalization performed only slightly worse. This validates the importance of image augmentation in creating a robust classifier for histology and emphasizes the importance of color transformations. While applying stain normalization did not noticeably hurt, this extra computation may not be necessary.

Stain Normalization vs Domain Adversarial Training

Ren et al. compared domain adversarial training with some stain normalization and color augmentation approaches, demonstrating that the domain adversarial approach was superior for generalizing to new image sets [Ren2019].

Stain Normalization vs Color Augmentation vs Domain Adversarial Training

Lafarge et al. performed a similar study that also included domain adversarial training. They compared domain adversarial training with color augmentation and stain normalization on mitosis classification and nuclei segmentation tasks [Lafarge2019].

On mitosis classification, they found that color augmentation performed best for test images from the same lab on which the model was trained. However, on images from other labs, domain adversarial training combined with color augmentation was best.

For nuclei segmentation, the results were a bit different. Stain normalization was key when tested on images of the same tissue type. On different tissue types, domain adversarial training with stain normalization was best.

Clearly domain adversarial training was beneficial for both types of domain shifts!

However, the best preprocessing and augmentation strategy varied with the dataset. Lafarge et al. speculated that this is due to the amount of domain variability in the training set.

The stain normalization methods tested in the studies above were limited to the traditional color matching and stain separation techniques. Many newer deep learning-based approaches exist that were not included in these benchmarks.

Recommendations

Traditional stain normalization techniques are worth trying as a first pass, as they're easier to implement and often faster to run. For some domain shifts, these may even be sufficient, especially when combined with color augmentation or domain adaptation. Experiments with a simpler method can also show whether some amount of stain normalization will improve model generalization performance. For more robust stain normalization that preserves tissue structure, evaluate the GAN-based methods discussed above.

The available data may also be a deciding factor in selecting appropriate techniques. Stain normalization and color augmentation do not require any target domain images, while the other three approaches do. Domain adversarial training and test-time model adaptation need only unlabeled target images, whereas finetuning needs labeled ones. For these reasons, stain normalization and color augmentation are often tried first, with adversarial domain adaptation included when needed. These three methods are also the best approaches for training a single generalizable model. If a large set of target images is available, then adapting the model (with unlabeled data) or finetuning (with labeled data) will likely be most effective.

Monitoring a deployed system for unexpected domain shifts is also critical. Stacke et al. developed a way to quantify domain shift [Stacke2020]. Their metric does not require annotated data, so it can serve as a simple test of whether new data is likely to be handled well by an existing model.
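Below is a numpy sketch of this kind of label-free check, loosely in the spirit of Stacke et al.'s representation shift. It works in three steps:

- summarize each image by the mean activation of every channel in a chosen layer;
- compare the source and target distributions of those summaries channel by channel, using a one-dimensional Wasserstein distance;
- average the distances over channels.

The function names, the quantile-grid approximation and the choice of layer are assumptions. Consult the paper for the exact definition and for how to set an alerting threshold.

```python
import numpy as np


def wasserstein_1d(a, b, n_quantiles=1000):
    """Approximate 1-D Wasserstein-1 distance by comparing quantile functions on a grid."""
    q = (np.arange(n_quantiles) + 0.5) / n_quantiles
    return np.mean(np.abs(np.quantile(a, q) - np.quantile(b, q)))


def representation_shift(source_acts, target_acts):
    """Mean per-channel Wasserstein distance between source and target activation summaries.

    *_acts: arrays (num_images, channels, H, W) of activations from one layer of the
    trained model. Each image is summarized by its spatially averaged activation per channel.
    """
    src = source_acts.mean(axis=(2, 3))  # (num_source_images, channels)
    tgt = target_acts.mean(axis=(2, 3))  # (num_target_images, channels)
    return np.mean([wasserstein_1d(src[:, c], tgt[:, c]) for c in range(src.shape[1])])
```

Because only model activations are needed, a score like this can be computed on every incoming batch of slides and tracked over time.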


References

[Aubreville2020a] M. Aubreville, C.A. Bertram, S. Jabari, C. Marzahl, R. Klopfleisch, A. Maier, Inter-species, inter-tissue domain adaptation for mitotic figure assessment (2020), arXiv preprint arXiv:1911.10873

[Aubreville2020b] M. Aubreville, C.A. Bertram, T.A. Donovan, C. Marzahl, A. Maier, R. Klopfleisch, A completely annotated whole slide image dataset of canine breast cancer to aid human breast cancer research (2020), Scientific Data

[Aubreville2021] M. Aubreville, C. Bertram, M. Veta, R. Klopfleisch, N. Stathonikos, K. Breininger, N. ter Hoeve, F. Ciompi, A. Maier, Quantifying the Scanner-Induced Domain Gap in Mitosis Detection (2021), arXiv preprint arXiv:2103.16515

[Cho2017] H. Cho, S. Lim, G. Choi, H. Min, Neural stain-style transfer learning using gan for histopathological images (2017), arXiv preprint arXiv:1710.08543

[Faryna2021] K. Faryna, J. van der Laak, G. Litjens, Tailoring automated data augmentation to H&E-stained histopathology (2021), Medical Imaging with Deep Learning

[Ganin2016] Y. Ganin, E. Ustinova, H. Ajakan, P. Germain, H. Larochelle, F. Laviolette, M. Marchand, V. Lempitsky, Domain-adversarial training of neural networks (2016), The Journal of Machine Learning Research

[Khan2014] A.M. Khan, N. Rajpoot, D. Treanor, D. Magee, A Nonlinear Mapping Approach to Stain Normalization in Digital Histopathology Images Using Image-Specific Color Deconvolution (2014), IEEE Transactions on Biomedical Engineering

[Khan2020] A. Khan, M. Atzori, S. Otálora, V. Andrearczyk, H. Müller, Generalizing convolution neural networks on stain color heterogeneous data for computational pathology (2020), Medical Imaging

[Lafarge2019] M.W. Lafarge, J.P. Pluim, K.A. Eppenhof, M. Veta, Learning domain-invariant representations of histological images (2019), Frontiers in Medicine

[Lo2021] Y.C. Lo, I.F. Chung, S.N. Guo, M.C. Wen, C.F. Juang, Cycle-consistent GAN-based stain translation of renal pathology images with glomerulus detection application (2021), Applied Soft Computing

[Macenko2009] M. Macenko, M. Niethammer, J.S. Marron, D. Borland, J.T. Woosley, X. Guan, C. Schmitt, N.E. Thomas, A method for normalizing histology slides for quantitative analysis (2009), International Symposium on Biomedical Imaging

[Nado2020] Z. Nado, S. Padhy, D. Sculley, A. D’Amour, B. Lakshminarayanan, J. Snoek, Evaluating prediction-time batch normalization for robustness under covariate shift (2020), arXiv preprint arXiv:2006.10963

[Reinhard2001] E. Reinhard, M. Ashikhmin, B. Gooch, P. Shirley, Color transfer between images (2001), Computer Graphics and Applications

[Ren2019] J. Ren, I. Hacihaliloglu, E.A. Singer, D.J. Foran, X. Qi, Unsupervised domain adaptation for classification of histopathology whole-slide images (2019), Frontiers in Bioengineering and Biotechnology

[Salehi2020] P. Salehi, A. Chalechale, Pix2pix-based stain-to-stain translation: A solution for robust stain normalization in histopathology images analysis (2020), International Conference on Machine Vision and Image Processing

[Stacke2020] K. Stacke, G. Eilertsen, J. Unger, C. Lundström, Measuring domain shift for deep learning in histopathology (2020), Journal of Biomedical and Health Informatics

[Tellez2018] D. Tellez, M. Balkenhol, N. Karssemeijer, G. Litjens, J. van der Laak, F. Ciompi, H and E stain augmentation improves generalization of convolutional networks for histopathological mitosis detection (2018), Medical Imaging

[Tellez2019] D. Tellez, G. Litjens, P. Bándi, W. Bulten, J.M. Bokhorst, F. Ciompi, J. van der Laak, Quantifying the effects of data augmentation and stain color normalization in convolutional neural networks for computational pathology (2019), Medical Image Analysis

[Vahadane2016] A. Vahadane, T. Peng, A. Sethi, S. Albarqouni, L. Wang, M. Baust, K. Steiger, A.M. Schlitter, I. Esposito, N. Navab, Structure-Preserving Color Normalization and Sparse Stain Separation for Histological Images (2016), IEEE Transactions on Medical Imaging

[Zhu2017] J.Y. Zhu, T. Park, P. Isola, A.A. Efros, Unpaired image-to-image translation using cycle-consistent adversarial networks (2017), Proceedings of the International Conference on Computer Vision